Neptune Service Shutdown - March 5, 2026

Following its acquisition by OpenAI, Neptune services will be permanently discontinued.

All remaining data will be deleted at shutdown and cannot be recovered.

See timelines, export instructions, and migration guides in the Transition Hub.

Neptune documentation

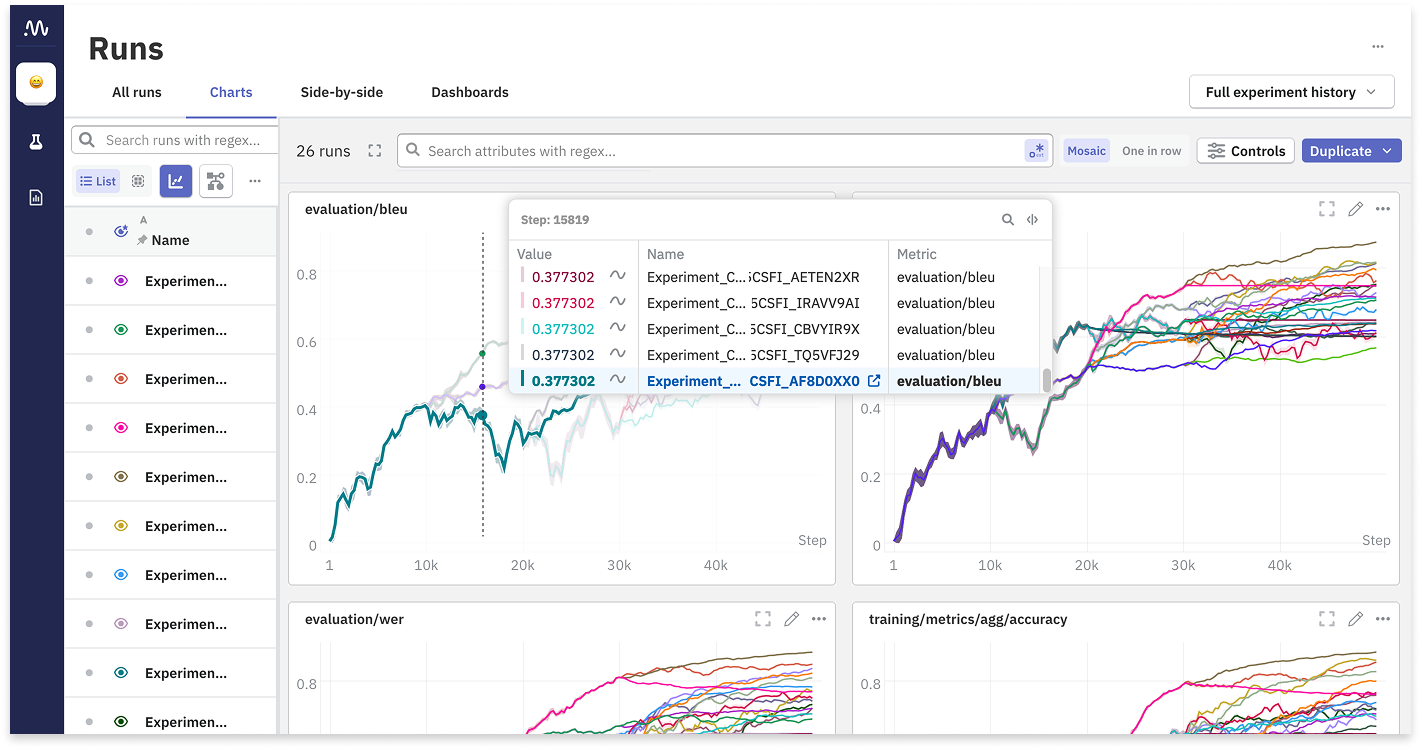

Learn how to use Neptune, the most scalable experiment tracker for teams that train foundation models.